Many organizations, large and small, are beginning to transition from Universal Analytics (UA) to Google Analytics 4 (GA4) for their reporting. As with any platform change, whether to GA4, Adobe Analytics, or another vendor, the same questions come up during the process:

- How is reporting similar/different between platforms?

- If it’s different, why don’t my numbers match, and by how much?

- How do we map old reporting to new?

- What are the downstream implications for my reporting? (Hint: check out this webinar we did about planning for your transition from UA to GA4.)

Understanding the “Why”

InfoTrust has helped lead many clients through platform transitions, but with GA4, it’s even easier to make the switch and prepare your stakeholders. In my experience, the questions above will all be answered at some point; what matters most is when. The sooner you know, the better you can prepare your stakeholders and internal teams and ensure a smooth transition. The least opportune time to find out is after you’ve released your first new report from GA4 and stakeholders raise concerns about your new implementation!

For instance, we know that GA4 does not count metrics such as pageviews and sessions in exactly the same way UA does, so some totals will differ by design; letting stakeholders know sooner rather than later will build credibility. However, there can also be differences in the implementation: perhaps a developer forgot about a data layer bug that is only being looked at now because they’re implementing a new GA4 feature.

These are situations that can keep any analyst up at night. Through the magic of dashboards and automation, we help our stakeholders find out sooner rather than later, so analysts can have a great night’s sleep. InfoTrust can also provide a unique perspective, given that we work with a variety of clients and know what differences to expect.

Making a Comparison

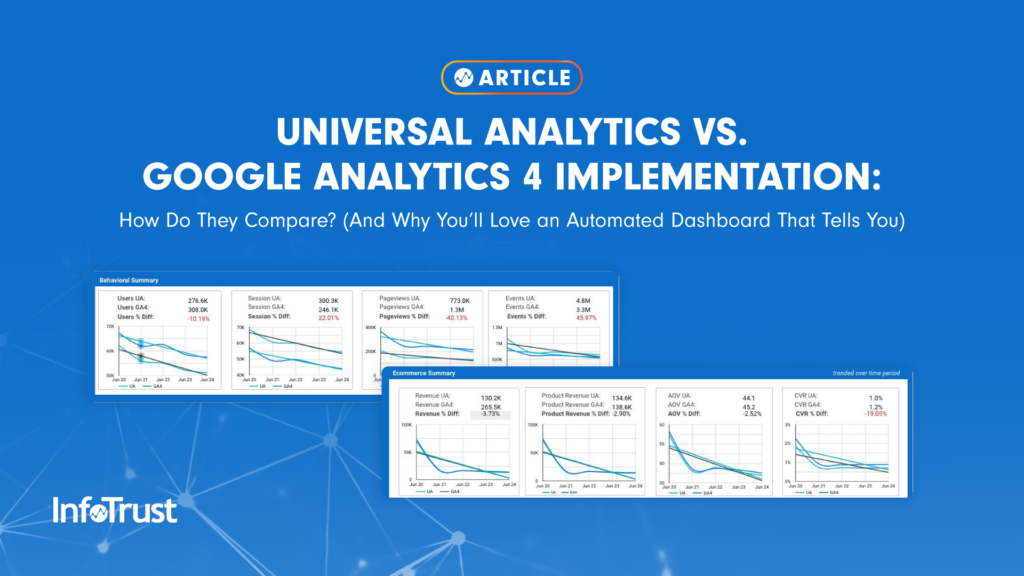

In the example below, we compare top-line metrics between the two implementations to understand the differences, and trend them over time to see whether the variance is changing. This is our first level of investigation: for each metric, we want either consistency or an explanation for the gap. Some differences stem from how each platform calculates a metric, while others are implementation-specific issues.

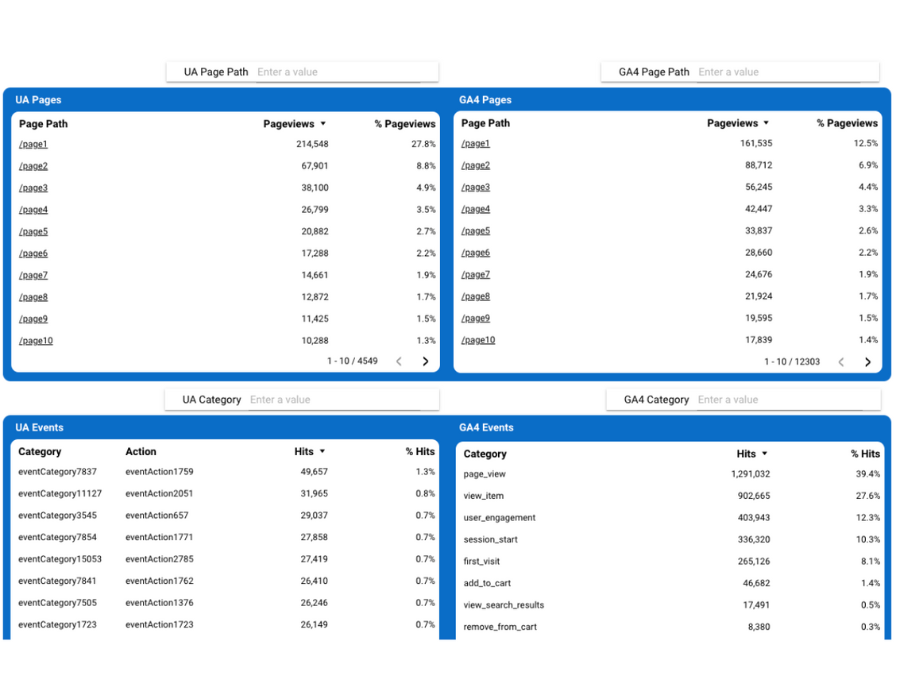
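To make the top-line comparison concrete, here is a minimal Python sketch of the variance calculation behind a trend chart like this. It assumes you have already exported one daily total per platform for a single metric; the `DailyTotals` type, the function names, and the numbers are all hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class DailyTotals:
    date: str    # ISO date string, so sorting by text sorts by time
    ua: float    # metric value reported by UA for that day
    ga4: float   # same metric reported by GA4 for that day


def percent_variance(ua: float, ga4: float) -> Optional[float]:
    """Return GA4's difference from UA as a percentage of the UA value."""
    if ua == 0:
        return None  # no UA baseline, so a percentage is undefined
    return (ga4 - ua) * 100 / ua


def variance_trend(rows: List[DailyTotals]) -> List[Tuple[str, Optional[float]]]:
    """Pair each date with its variance, in date order, ready to chart."""
    ordered = sorted(rows, key=lambda r: r.date)
    return [(r.date, percent_variance(r.ua, r.ga4)) for r in ordered]


# Made-up session counts, for illustration only.
sessions = [
    DailyTotals("2022-03-02", ua=1200, ga4=1140),
    DailyTotals("2022-03-01", ua=1000, ga4=950),
    DailyTotals("2022-03-03", ua=800, ga4=720),
]
trend = variance_trend(sessions)
for date, pct in trend:
    print(date, "n/a" if pct is None else f"{pct:+.1f}%")
```

A steady variance usually points to a difference in methodology, while a variance that drifts or jumps on a particular day is more likely to be an implementation change worth investigating.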

We also dig deeper into device type, pages, events, and channel data to help unpack what may be causing a discrepancy. Having this reporting automated means your team can spend more time unpacking the why, and less time downloading data from each platform and aggregating it.
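The drill-down can be sketched the same way. The Python snippet below ranks the values of one dimension (device type here) by how much each contributes to the overall gap, assuming both platforms' totals have already been exported into plain dictionaries. The function name and the figures are hypothetical.

```python
from typing import Dict, List, Tuple


def gap_by_segment(ua: Dict[str, float], ga4: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank segments by the absolute size of the GA4-minus-UA gap."""
    segments = set(ua) | set(ga4)
    # A segment missing on one side is treated as zero on that side.
    gaps = [(s, ga4.get(s, 0) - ua.get(s, 0)) for s in segments]
    # Largest absolute gap first; ties are broken alphabetically.
    return sorted(gaps, key=lambda g: (-abs(g[1]), g[0]))


# Made-up session counts by device type, for illustration only.
ua_by_device = {"desktop": 600, "mobile": 350, "tablet": 50}
ga4_by_device = {"desktop": 595, "mobile": 290, "tablet": 48}

ranked = gap_by_segment(ua_by_device, ga4_by_device)
for segment, gap in ranked:
    print(f"{segment}: {gap:+}")
```

In this made-up case almost all of the gap sits in mobile, which narrows the investigation to mobile-specific tagging or templates rather than the implementation as a whole.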

It’s good practice to compare every metric and dimension, and because the reporting can be automated, why wouldn’t you? Our reporting then typically works through custom metrics, dimensions, and events to check consistency between platforms. This tends to be the longer tail of validation, as your implementation becomes more complete over time.
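For the long tail, an automated check can simply flag anything that falls outside an agreed tolerance or exists on only one platform. The sketch below is one possible way to do that in Python; the 5% default tolerance, the function name, and the event names and counts are assumptions for illustration, not a recommended threshold.

```python
from typing import Dict


def flag_mismatches(ua: Dict[str, float], ga4: Dict[str, float],
                    tolerance_pct: float = 5.0) -> Dict[str, str]:
    """Return a reason for every name that is missing on one side
    or differs from UA by more than tolerance_pct."""
    flagged: Dict[str, str] = {}
    for name in sorted(set(ua) | set(ga4)):
        if name not in ua:
            flagged[name] = "missing in UA"
        elif name not in ga4:
            flagged[name] = "missing in GA4"
        elif ua[name] == 0:
            # No baseline for a percentage; flag only if GA4 recorded something.
            if ga4[name] != 0:
                flagged[name] = "UA is zero, GA4 is not"
        else:
            pct = (ga4[name] - ua[name]) * 100 / ua[name]
            if abs(pct) > tolerance_pct:
                flagged[name] = f"{pct:+.1f}% vs UA"
    return flagged


# Hypothetical event counts, already mapped to a shared naming scheme.
ua_events = {"add_to_cart": 400, "newsletter_signup": 120, "video_start": 90}
ga4_events = {"add_to_cart": 392, "newsletter_signup": 84, "purchase": 35}

issues = flag_mismatches(ua_events, ga4_events)
for name, reason in issues.items():
    print(f"{name}: {reason}")
```

Anything the check returns becomes the analyst's to-do list, and everything else can be treated as validated until the next run.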

A Final Word

Whether you choose to automate reporting internally or with a partner like InfoTrust, building a foundation of summary metrics will pay off down the road: when you start transitioning old reports to GA4, most of the initial dataset will already be built out.