What is A/B testing and when would you use it?

A/B testing, in simple terms, is an experimentation method that involves applying a change to one or more elements on your website and seeing if it improves conversion towards a specific target. The best time to run A/B tests is when you want to achieve a specific goal or improve your conversion rate, but don’t know exactly where to begin.

There are other forms of experimentation (multivariate experiments, site redirects, etc.), but A/B testing is recommended when you want to drown out the noise and identify one or two directly observable causes of uplift. Multivariate experiments and site redirects need a lot of traffic and often don’t pinpoint the exact change that caused the outcome (unless you apply some advanced data science techniques). Having said that, if you have the opportunity (and traffic) to go for more advanced testing, by all means, do so! You just might uncover something that sequential A/B testing would not.

How does A/B testing work?

It’s a basic 6-step process:

- Determine the conversion you want to improve.

- Come up with a list of hypotheses with associated elements to change.

- Prioritize testing based on your hypotheses – we recommend the P.I.E. method!

- Set up and run your tests using Google Optimize or any other optimization and A/B testing tool. To run the test, you’ll show 2 sets of users (assigned at random) different versions of your site and see which group performs better towards your goal.

- Analyze your results.

- Refine and repeat if needed.
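
The prioritization step above can be sketched in a few lines. This is only an illustration, assuming the common reading of P.I.E. as Potential, Importance, and Ease, each scored from 1 to 10 and averaged. The `Hypothesis` class, the `prioritize` helper, and the example scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """One test idea with assumed P.I.E. scores on a 1-10 scale."""
    name: str
    potential: int   # how much room for improvement the page has
    importance: int  # how valuable the traffic to that page is
    ease: int        # how easy the change is to build and launch

    @property
    def pie_score(self) -> float:
        # Plain average of the three scores.
        return (self.potential + self.importance + self.ease) / 3


def prioritize(hypotheses):
    """Return hypotheses ordered from highest to lowest P.I.E. score."""
    return sorted(hypotheses, key=lambda h: h.pie_score, reverse=True)


# Made-up backlog, for illustration only.
backlog = [
    Hypothesis("Move coupon CTA onto recipe pages", 8, 7, 6),
    Hypothesis("Replace homepage banner with video", 6, 9, 3),
    Hypothesis("Shorten newsletter signup form", 7, 6, 9),
]

for h in prioritize(backlog):
    print(f"{h.pie_score:.1f}  {h.name}")
```

The scores are judgment calls, so the ranking is a conversation starter for your team rather than a verdict.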

For more details on what A/B testing does, and how you’d run this 6-step process, see this webinar on Getting Started with A/B testing.
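
To make the "run" and "analyze" steps more concrete, here is a minimal sketch of what a testing tool does behind the scenes. It is not how Google Optimize or any specific tool is implemented. It assumes a 50/50 split based on a hash of a user ID and a standard two-proportion z-test on conversion counts; the function names and the example numbers are hypothetical.

```python
import hashlib
from math import sqrt
from statistics import NormalDist


def assign_variant(user_id: str, experiment: str) -> str:
    """Place a user in variant A or B, effectively at random but stable.

    Hashing the experiment name together with the user ID means the same
    user always sees the same version for the life of the experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up results: 200 of 5,000 users converted on A, 260 of 5,000 on B.
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference between the groups is unlikely to be noise alone. Most testing tools report an equivalent figure for you, so treat this as a way to understand the report, not to replace it.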

CPG Use Cases and Testing Opportunities:

CPG organizations tend to underestimate the power of A/B testing. Sure, you may not always be able to pinpoint an uplift in terms of concrete sales numbers or revenue, but the impact of a bad on-site user experience, or the power of content to engage your customer and move them down the funnel, can make or break your business.

So if you haven’t been using A/B testing until now (the free version of Google Optimize allows you to do up to 5 experiments simultaneously!), here are some areas you might want to consider:

The goal:

Improve the user experience on your site

The problem: We all know how frustrating it can be to go around in loops when navigating a site – having to fill in a form multiple times, navigate past an annoying popup, or even finding a link that’s unclickable or too small to click! You do not want your customers that have been acquired with so much thought and planning (and media investment) to simply land on your site and leave!

The testing opportunity: Test by changing forms, CTAs, link placements, and redirects as well as placing important content above the fold to minimize the need for scrolling.

The goal:

Increase customer engagement (more pages/session, lower bounce rate, higher time on page)

The problem: Have you ever landed on a site that just didn’t quite catch your eye? Was the main homepage banner a tad bit boring? Did you not find the content you were looking for? Did the whole experience scream ‘unappealing’? Did you end up in a fit of click-rage?

The testing opportunity: Test by changing images, section outlines, banners, and animations.

The goal:

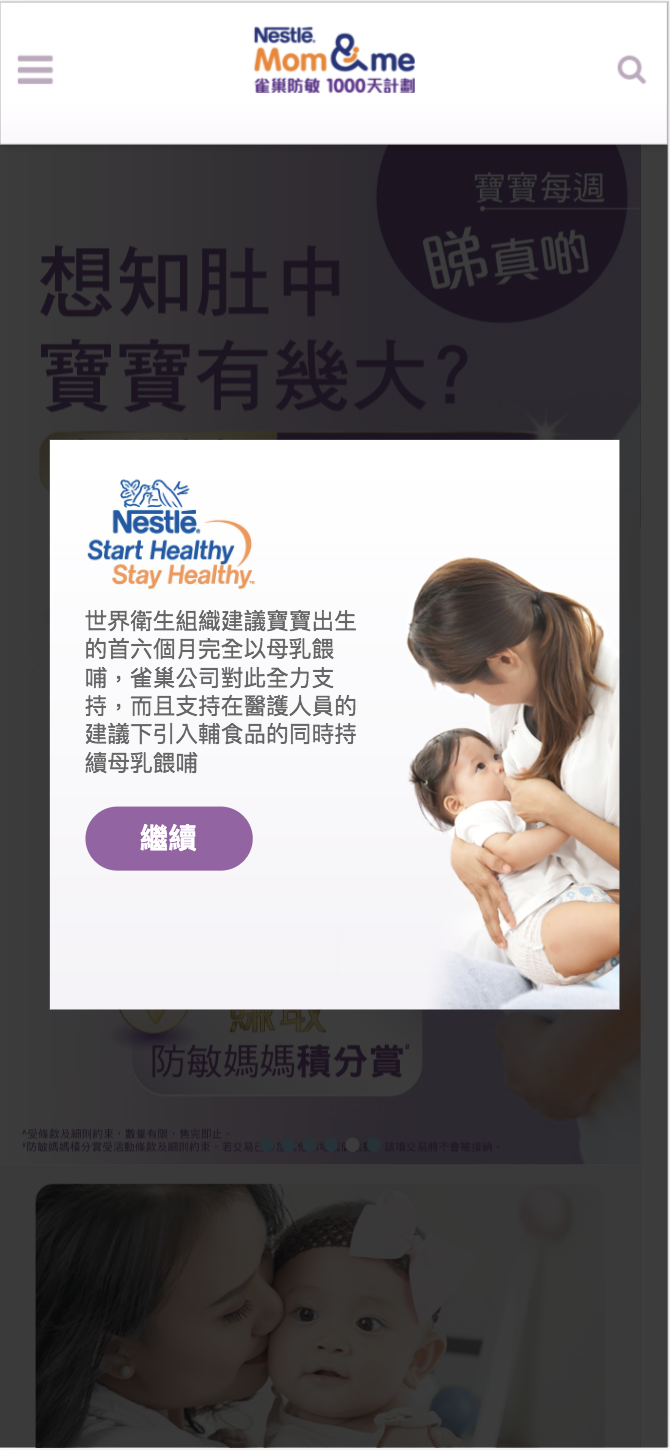

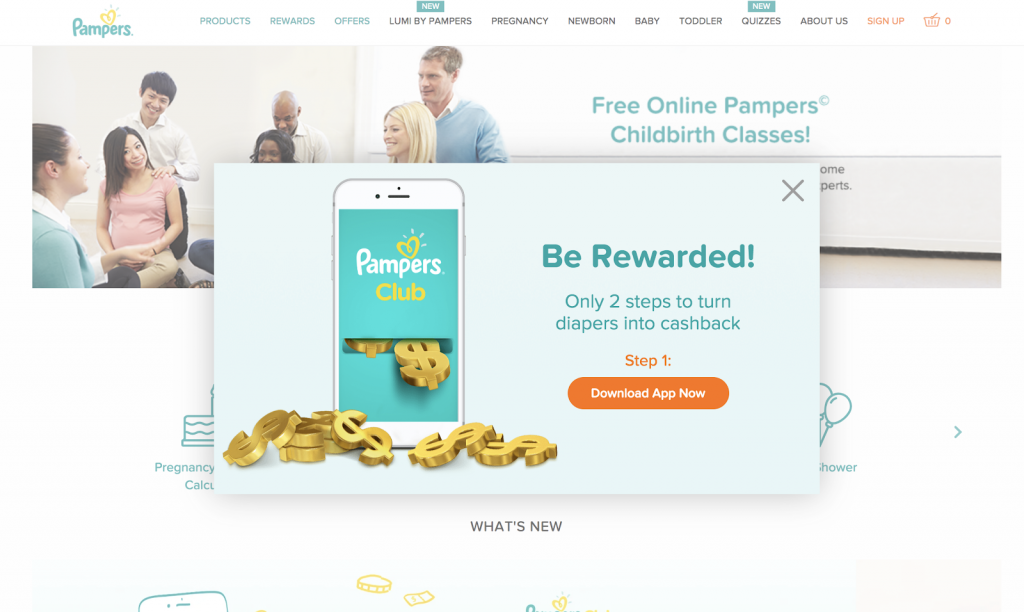

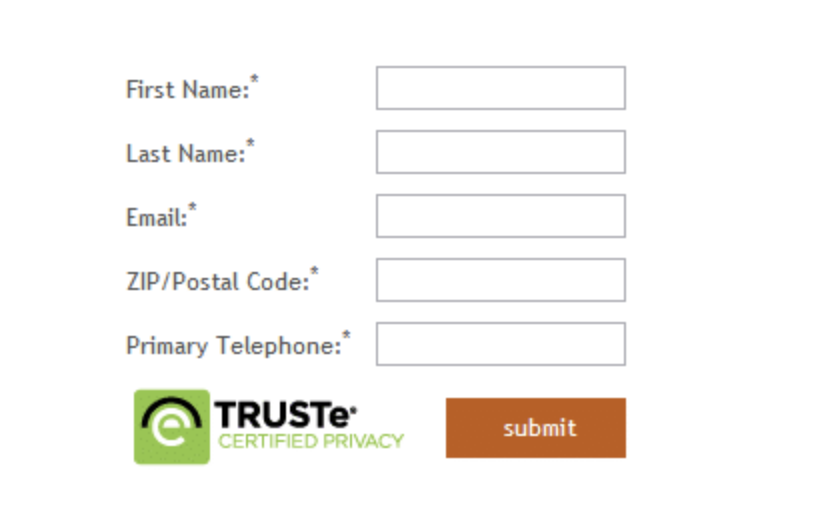

Grow subscribers to clubs/newsletters

The problem: Are your customers not registering on your site? Are they content with browsing as a guest? Do they not seem to want to hear from you beyond the one session? Maybe you want them to come back and be served a more personalized experience on-site, but how do you get them on board with this?

The testing opportunity: Test by changing the form location, the number and difficulty of form steps, captchas, and the CTAs that lead to the form. Also test adding popups, clear callouts for the benefits of registering, and security seals that reassure users about data privacy.

The goal:

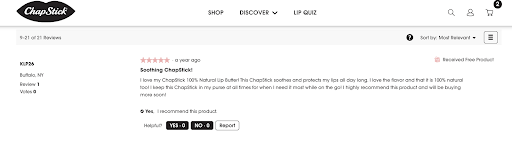

Encourage submissions of reviews and testimonials

The problem: You have some great products but people don’t know how great they really are! Harnessing the power of your existing customers to spread the word is invaluable. How can you get your current customers browsing your site to want to leave a review or testimonial?

The testing opportunity: Modify the ‘Leave a Review’ button color/font/text/placement. Additionally, test changes to the review form and submission process, and call out the benefits and rewards for leaving reviews at other locations on-site.

The goal:

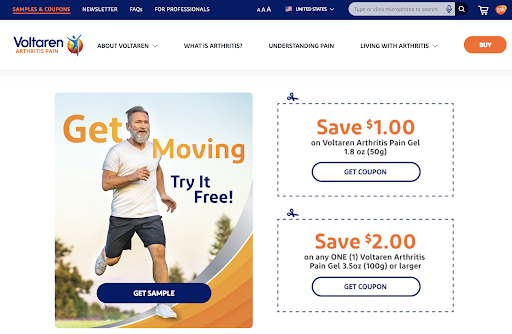

Increase coupon downloads

The problem: Customers don’t seem to want to download your coupons that offer them savings in-store. Is the coupon download section accessible? Is the download process easy? Is it visually appealing?

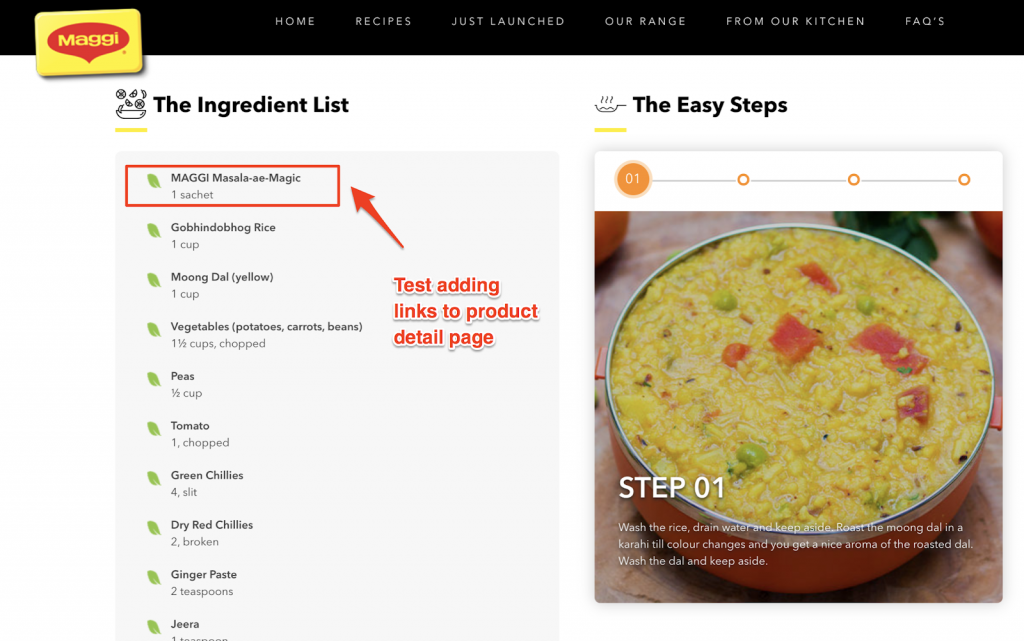

The testing opportunity: Test by making changes to CTA locations (try adding it on the product or recipe or How-To page instead of in a separate section), font, size, color, and form.

The goal:

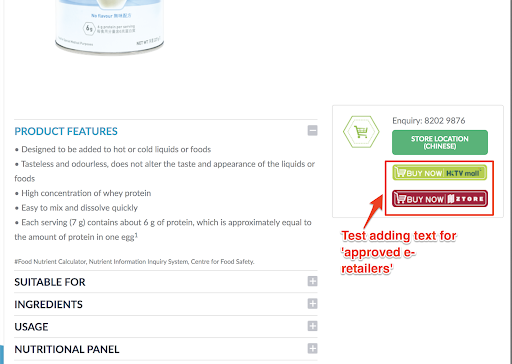

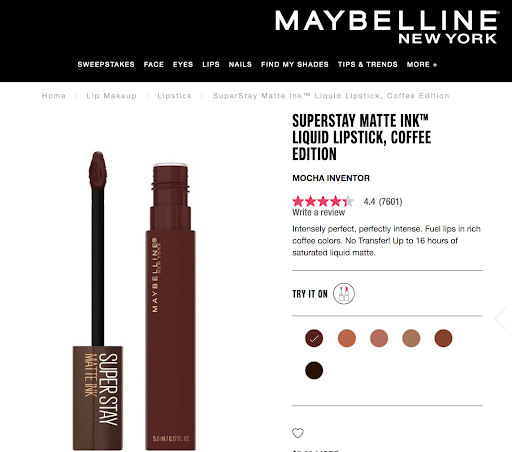

Encourage clicks to e-retailer

The problem: Similar to coupon downloads, do users just view the product details and not seem to want to click to purchase? Either that was not their intention to begin with, or it’s not the right location to prompt them to purchase. To address the former, revisit your targeting and on-site page journey optimization.

The testing opportunity: Optimize product detail pages so that users have all the relevant information they need to purchase, and emphasize variants, availability, etc. Test by making changes to CTA locations (try adding it on the recipe page instead of on the product detail page), font, size, color, etc. Test by adding ‘approved provider’ labels next to the e-retailer CTA button.

The goal:

Increase Home-Page to Product-Page conversion rate

The problem: Users are visiting your website’s home page but are not interested in seeing other sections such as Products or Articles. Is there a user experience issue or a disconnect in the on-site journey flow? Are users not being directed to the right landing page? Is the site navigation experience poor?

The testing opportunity: Test video homepage banners or modified images on the banner. Test CTA font, size, color for link clicks to high-value sections. Reduce text. Move CTAs above the fold. Replace prominent navigation bar headers with higher-value sections such as Products.

In all these cases, we recommend complementing your quantitative findings with the help of a qualitative tool such as SessionCam that will show you customer heatmaps and struggle areas as well. You can also ask your customers for feedback directly using Google Surveys or any other customer feedback tool.

What can you test on your CPG site?

CPG sites are traditionally content-based with minimal interactive elements beyond basic videos and quizzes. But, that doesn’t mean there’s a dearth of elements to test. Here are some unique/non-standard elements you can use as well:

- Popup for newsletter subscribe or join the club on a specific page

- Video Header or banner on the homepage

- Social proof (e.g., marking reviewers in the Reviews and Testimonials section as verified users or as having received free products)

- Security Seals for e-payment and form submits (to reassure users that their data is safe)

- Smiling Faces in images

- Eye-direction in images pointing towards CTAs

- Faces vs Products on pages/banners – depending on the product

- Less text or changed copy on banners

- Reduction of clutter – adding/removing icons instead of text for nav bars, sections, etc.

- Use the scarcity principle to your advantage and emphasize low stock to give a sense of urgency

- Changes to navigation bars on hover elements – content, size, color

- Changing colors to be more prominent/contrasting

- Emphasize product variant options and availability to give users choice

- Links to product pages and CTAs to e-retailers from recipe/content pages

- Different landing pages for different audience segments: Remember the audience segments I talked about in my previous post? If you can customize your ads with DCO, why should the landing page be totally different from the ad experience? Note: This will need a redirect test and a personalization experiment, and can’t be achieved with a simple A/B test.

Are you doing A/B testing wrong? Here are some of our best practices for A/B testing on CPG Sites

If you’re going to run an A/B test, do it right! There’s nothing worse than using bad data to try to get good results!

Here are some common mistakes we’ve seen made when running A/B tests:

1) Before Testing

- Running an A/B test when you should be running a multivariate test or a redirect test instead

Sequential A/B testing can uncover great insights, but sometimes it’s a combination of changes that make the difference.

2) During Testing

- Not running the A/B test for a long enough time period

Wait for at least 1-2 weeks. If you don’t see enough traffic to generate a result by then, consider whether it is possible and worth your while to extend the experiment.
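
How long is "long enough" depends on your traffic and on how small an uplift you want to detect. As a rough sketch, the standard sample-size formula for comparing two conversion rates can be turned into a duration estimate. The function names, the 5% significance level, the 80% power, and the example traffic figures below are all assumptions made for illustration.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_uplift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH variant to detect the given uplift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days required if all daily visitors to the page enter the experiment."""
    return ceil(n_per_variant * variants / daily_visitors)


# Made-up example: 4% baseline conversion, hoping to detect a 20% relative
# uplift (4.0% -> 4.8%), with 1,500 visitors a day on the tested page.
n = sample_size_per_variant(0.04, 0.20)
days = days_needed(n, daily_visitors=1500)
print(f"{n} users per variant, about {days} days")
```

Even when the estimate comes out shorter than a week, it is still worth running for at least one full week so that weekday and weekend behavior are both represented.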

- Setting up too many objectives to optimize

You don’t want to muddy the waters before you’ve found the first speck of gold. Begin by selecting one key metric. While you’re at it, choose just one page type on-site for testing and one hypothesis to be tested as well (i.e., one element to be modified).

- Running multiple tests simultaneously with common traffic

Do not run more than 1 experiment on the same page at the same time. It amounts to the same as having too many variables that could have caused the outcome.

3) After Testing

- Testing once and never again

It’s sometimes all too easy to consider a result as final. Perhaps you’ve deployed it permanently already or you don’t have the budget to re-run the experiment. Maybe you don’t want to risk having a proven test result potentially get disproved. My advice is to do it anyway. Budget for multiple runs of the same experiment. Run it multiple times. Admit what you know and what you don’t know for sure. If the result doesn’t hold up, it’s better to revert to the original variant sooner rather than later.

- Making assumptions about the consumer behavior that drove the result and not validating it with qualitative tools

This is the classic case of mistaking correlation for causation. Never underestimate the ability of your mind to play tricks on you based on previous experiences and inherent biases. Use a qualitative tool (SessionCam, Hotjar, CrazyEgg) to see what your users actually did on-site, or ask them directly in a survey.

- Considering the lack of a winner or an inconclusive result as a failed experiment

It’s far better to have tested and to have been found inconclusive than to have never tested at all! Now you know the change did not measurably beat the original content at this point in time. Repeat the experiment when you have more traffic or simply test another hypothesis.

- Failing to take action based on your results

You got your winner, and now it’s time to launch it permanently. First, complete the test by disabling the losing variant or enabling the winning variant only. Do you need to jump through hoops before you can do this? Plan ahead and make sure there are no obstacles to getting your winning variant to do the heavy lifting as soon as possible. If you don’t have a clear winner, plan and launch the next experiment immediately. Not acting on results defeats the purpose.

More specifically for CPG sites:

1) Before testing

- Selecting the wrong kinds of pages for testing without understanding their value

Look for pages that are valuable for your business. For example, is the blog section as valuable as your coupon download or subscribe page?

- Failing to consider the target audience for that site (or page) when selecting which elements to change

CPG users are not all alike. Understand the users who would use that specific page first. For example, if it is a website for healthcare products for the elderly, consider changing elements to appeal to older users (e.g., increased font size, less text, changes to navigation bars).

2) During testing

- Testing changes during non-standard timeframes (e.g., holiday periods, off-peak seasons, known campaign periods)

There’s nothing worse than getting an uplift that is due to external factors (back-to-school, weather, salary day) rather than to the change you actually tested.

- Testing only on desktop and ignoring mobile

Since CPG is mostly about engagement and content, and as more consumers move onto mobile, testing the mobile experience becomes extremely important for CPG sites.

3) After testing

- Not comparing to business results

CPG websites are often slightly removed from the endpoint of the purchase funnel. But that doesn’t mean you optimize the middle section and forget about the rest. It’s extremely important after you deploy a winning variant to see the impact it has on not only your on-site metrics but also your business results (this could be anything from an increase in brand health to an increase in coupon use in-store). While this may not be obvious or even attributable to online experimentation, at the very least you should be able to say that experimentation was an enabler to move consumers down the funnel.

Hopefully, this article covered some of the questions and pain points associated with doing A/B testing on CPG sites. InfoTrust would love to help you get started with your experimentation strategy and make the most out of your CPG websites.

One last note: Always be testing!